NVIDIA’s GPU technology plays a central role in high-performance computing and artificial intelligence, particularly in deep learning and large-scale data processing. Two of NVIDIA’s most notable GPU platforms are NVIDIA DGX and NVIDIA HGX. Despite their similar names, they represent two different ways in which NVIDIA sells 8x GPU systems with NVLink technology. NVIDIA’s business model evolved between the P100 “Pascal” and V100 “Volta” generations, and the HGX model has reached new heights with the introduction of the A100 “Ampere” and H100 “Hopper” GPUs.

One of the simplest ways to understand the distinction between NVIDIA HGX and NVIDIA DGX is by considering the following:

- NVIDIA DGX is NVIDIA’s own brand of complete systems.

- NVIDIA HGX is an NVIDIA-authorized platform that third-party OEMs use to build systems with 8x NVLink GPUs and NVSwitch technology.

What is NVIDIA DGX?

DGX is a series of high-performance computing systems launched by NVIDIA that integrate GPUs and deep learning software. It is designed to provide powerful computing capabilities and development environments for deep learning researchers and data scientists. The DGX platform also comes preloaded with NVIDIA’s deep learning software libraries and tools, such as CUDA and TensorRT, enabling users to quickly engage in deep learning tasks.

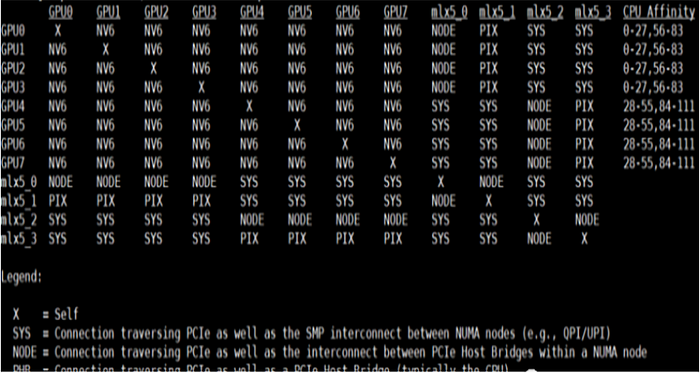

The DGX system utilizes NVIDIA’s proprietary GPU architecture and is equipped with multiple high-performance GPUs, typically 8 in number, which are interconnected through NVLink technology to achieve low-latency and high-bandwidth data transfer.

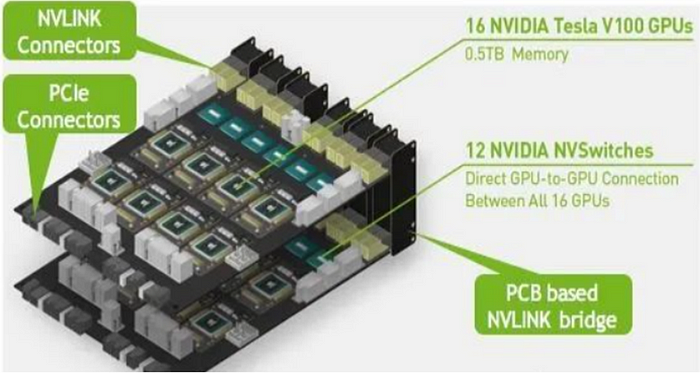

Initially, NVIDIA’s vision was to combine two standardized GPU boards with a larger switching fabric, so that the GPUs could communicate efficiently through NVIDIA NVSwitch chips while the system kept a standardized PCIe topology. This approach led to the birth of HGX as a standardized GPU platform.

What is NVIDIA HGX?

The NVIDIA HGX series is a hardware specification for a more flexible and customizable GPU platform. HGX is commonly expanded as “Hyperscale Graphics eXtension.” It is a modular hardware design that defines the hardware interfaces and interconnect standards for the GPU portion of a server, so that partners can integrate the GPUs, the interconnect, and other key components into their own hardware platforms and build GPU systems tailored to their needs. This modular design lets HGX meet the requirements of different scales and application scenarios, from data centers to supercomputers.

NVIDIA has made significant contributions to standardizing the 8x SXM GPU platform. By adopting Broadcom PCIe switches, NVIDIA gave the platform a consistent way to connect the GPUs to the host and to InfiniBand. In addition, the NVSwitch, which builds on NVLink, greatly improved communication performance between GPUs.

How did NVIDIA HGX appear? What is the difference between it and DGX?

Perhaps the simplest way to approach this is to think of the NVIDIA DGX series as NVIDIA’s own standard system. It is still built around the NVIDIA HGX 8x GPU and NVSwitch baseboard, but the complete system is designed by NVIDIA. The trend for DGX is toward higher levels of networking integration, so that DGX systems can be deployed in clusters such as the DGX SuperPOD.

When deploying a cluster of DGX H100 systems, which are often regarded as the gold standard for GPU system design, optical connectivity becomes essential for data transmission. It is crucial to choose flat-top optical transceivers, such as the OSFP 800G 2xSR4 and 2xDR4 flat-top modules, to ensure compatibility with the DGX H100 system.
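The module names mentioned above encode their own lane arithmetic, which the following sketch spells out. It is only an illustration: `TwinPortModule` is a hypothetical helper, and the figure of 100 Gb/s per optical lane is an assumption based on how 400G SR4 and DR4 interfaces are commonly specified, not a value taken from a datasheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwinPortModule:
    """Hypothetical model of a twin-port OSFP transceiver."""
    name: str
    ports: int           # the "2x" in 2xSR4 / 2xDR4
    lanes_per_port: int  # the "4" in SR4 / DR4
    gbps_per_lane: int   # assumed: 100 Gb/s per optical lane

    @property
    def total_gbps(self) -> int:
        # Aggregate rate = ports x lanes per port x rate per lane
        return self.ports * self.lanes_per_port * self.gbps_per_lane


MODULES = [
    TwinPortModule("OSFP 800G 2xSR4", ports=2, lanes_per_port=4, gbps_per_lane=100),
    TwinPortModule("OSFP 800G 2xDR4", ports=2, lanes_per_port=4, gbps_per_lane=100),
]

for module in MODULES:
    print(f"{module.name}: {module.total_gbps} Gb/s")
```

Under these assumptions both module types come out at 800 Gb/s, since each one carries two 400G interfaces in a single OSFP housing.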

For customers who want a more customized solution, NVIDIA offers the HGX H100 platform. It allows OEMs to tailor their designs, with options for denser configurations, different CPUs (e.g., AMD or ARM for more cores), different Xeon SKU levels, varying RAM and storage configurations, and even a range of NICs.
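One way to picture this split is as a fixed GPU baseboard with OEM-chosen parts around it. The sketch below is a hypothetical data model, not an NVIDIA or OEM API: the class names, vendor names, and component strings are all placeholders invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class HgxBaseboard:
    """The part the OEM does not change (frozen on purpose)."""
    gpu_model: str = "H100"
    gpu_count: int = 8
    interconnect: str = "NVLink + NVSwitch"


@dataclass
class OemServer:
    """Everything the OEM is free to choose around the baseboard."""
    vendor: str
    cpu: str
    ram_gb: int
    storage: list[str]
    nics: list[str]
    baseboard: HgxBaseboard = field(default_factory=HgxBaseboard)


# Two hypothetical OEM designs built on the same baseboard
server_a = OemServer(
    vendor="ExampleOEM-A",
    cpu="2x Xeon (placeholder SKU)",
    ram_gb=1024,
    storage=["NVMe (placeholder)"],
    nics=["InfiniBand (placeholder)"],
)
server_b = OemServer(
    vendor="ExampleOEM-B",
    cpu="2x AMD (placeholder SKU)",
    ram_gb=2048,
    storage=["NVMe (placeholder)", "NVMe (placeholder)"],
    nics=["Ethernet (placeholder)"],
)

print(server_a.baseboard == server_b.baseboard)  # same GPU portion
print(server_a.cpu == server_b.cpu)              # different host design
```

Making the baseboard a frozen dataclass mirrors the idea that the GPU portion stays the same while the CPU, memory, storage, and NIC choices vary by vendor.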

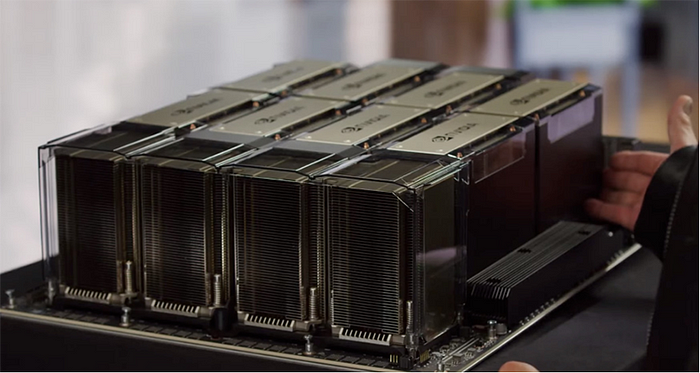

In the next generation of products, NVIDIA recognized that increasing power lets GPUs accomplish more work, but also generates more heat. To address this, NVIDIA introduced the liquid-cooled NVIDIA HGX A100 “Delta” platform, which provides improved heat dissipation. In the latest “Hopper” generation, the NVIDIA HGX H100 components require larger heatsinks to accommodate the higher-power GPUs and the higher-performance NVSwitch architecture. NVIDIA therefore introduced the “Delta Next” version of the platform, the NVIDIA HGX H100, to meet this challenge.

With the NVIDIA DGX H100, NVIDIA has taken a step further. It features the new NVIDIA Cedar 1.6Tbps InfiniBand module, with each module containing four NVIDIA ConnectX-7 controllers. Since acquiring Mellanox, NVIDIA has shifted its focus towards InfiniBand, and the Cedar module is a prime example. This evolution has also led to improvements in the HGX platform.
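The 1.6Tbps figure is consistent with four controllers per module if each ConnectX-7 is assumed to run at 400 Gb/s. That per-controller rate is an assumption of this sketch and is not stated in the text above; the constant and function names are also just illustrative.

```python
# From the text: four ConnectX-7 controllers per Cedar module
CONTROLLERS_PER_MODULE = 4
# Assumption: 400 Gb/s line rate per ConnectX-7 controller
GBPS_PER_CONTROLLER = 400


def module_bandwidth_tbps(
    controllers: int = CONTROLLERS_PER_MODULE,
    gbps_per_controller: int = GBPS_PER_CONTROLLER,
) -> float:
    """Aggregate module bandwidth in Tb/s (1 Tb/s = 1000 Gb/s)."""
    return controllers * gbps_per_controller / 1000


print(f"Cedar module: {module_bandwidth_tbps()} Tb/s")
```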

NVIDIA HGX: A Popular Choice for AI Computing Platforms

The NVIDIA HGX A100 and HGX H100 have been popular offerings ever since they were disclosed as the platforms used by OpenAI for ChatGPT. Server vendors can purchase the assembled 8x GPU baseboard directly from NVIDIA, which removes the risk of assembly mistakes such as applying too thick a layer of thermal paste on the GPUs. This is the basis of the NVIDIA HGX topology. Server vendors can build whatever chassis they need around the baseboard and configure components such as RAM, CPU, and storage, provided that the GPU portion adheres to the fixed topology of the NVIDIA HGX baseboard.

The NVIDIA HGX board eliminates the extensive engineering work otherwise required to connect eight GPUs to high-speed NVLink and PCIe switching fabrics. OEM partners can build custom configurations, while NVIDIA can price the HGX board at a higher profit margin. NVIDIA’s target market for DGX differs from that of many OEMs, since DGX is aimed at high-value AI clusters and the ecosystem surrounding them.

NVIDIA DGX and NVIDIA HGX are two GPU platforms designed for different needs and application scenarios. DGX provides a fully integrated solution suitable for rapid deployment and high-performance deep learning tasks. HGX, on the other hand, offers greater flexibility and scalability, catering to data centers and cloud computing environments that require customization and flexible configurations. Regardless of the chosen platform, NVIDIA’s technology and innovation deliver exceptional GPU computing power and deep learning support to users.

In this context, it is worth mentioning NADDOD. NADDOD is a leading provider of comprehensive optical networking solutions, offering innovative, efficient, and reliable optical networking products. Our products and AI networking solutions are known for their high quality and outstanding performance, and are widely used in AI data centers, high-performance computing, education and research, biomedicine, finance, energy, autonomous driving, internet, manufacturing, and telecommunications.

As a customer-centric supplier, we provide AOC/DAC cables, optical modules, switches, smart NICs, DPUs, and GPU integrated solutions. With low cost and excellent performance, they help customers accelerate their business. With a professional marketing and technical team and extensive project implementation experience, NADDOD has earned the trust of customers in optical networking and high-performance computing.